When people talk about cloud storage, Amazon S3 is often the first name they think of. It is famous for storing very large amounts of data safely, and it connects well with the rest of the Amazon Web Services (AWS) ecosystem.

But as more businesses move to the cloud, many start looking for other options. They want cloud storage that is cheaper, faster, more flexible or better suited to local data rules. Because of this, there are now many strong alternatives to Amazon S3.

Whether you are a startup that wants low-cost storage, a business that must follow strict data rules or a large company that wants to use more than one cloud, you will find many good choices besides S3.

In this blog, we’ll explore the top 10 Amazon S3 alternatives and competitors in 2026. We'll look at their key features, benefits, pricing, and when each option might be the best fit for your cloud storage needs.

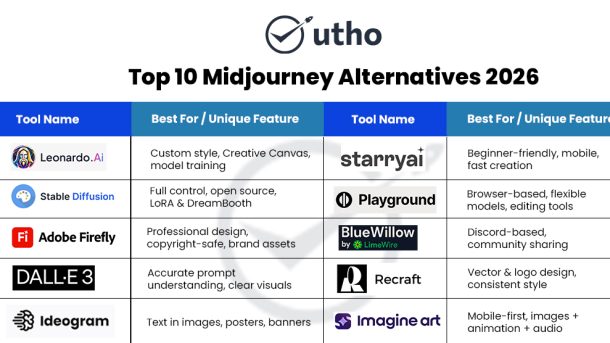

Here’s the list:

- MinIO – The High-Performance Open-Source S3 Alternative

- Microsoft Azure Blob Storage

- High Performance Cloud Object Storage by Utho

- Backblaze B2 Cloud Storage

- IBM Cloud Object Storage

- DigitalOcean Spaces – Simple Cloud Storage for Everyone

- Linode Object Storage (Akamai Cloud)

- Oracle Cloud Object Storage – Strong and Easy Cloud Storage for Big Companies

- Alibaba Cloud OSS – Simple and Strong Cloud Storage

- Wasabi Hot Cloud Storage

Why Do Companies Look for Amazon S3 Alternatives?

Amazon S3 is very popular, and many companies use it to store, manage and access data. Still, many businesses look at other providers. The reasons are not only technical; they are also about price, speed, legal rules and flexibility. Let us look at the main reasons.

1. Cost

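One big reason is cost. Amazon S3 is good and reliable, but it can get expensive quickly as the amount of data grows. Storage is only part of the bill: S3 also charges for requests and for data transferred out to the internet (egress), so a company that reads or moves data often can see its bills rise sharply. Other providers offer similar storage at lower cost, and some charge little or nothing for egress, which helps businesses save money.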
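To see why data transfer can dominate the bill, here is a rough monthly estimate. The three prices are made-up placeholders chosen only to show the shape of the calculation; they are not any provider's real price list, so substitute current figures from the provider's pricing page.

```python
from decimal import Decimal

# Hypothetical placeholder prices in USD, NOT any provider's real pricing.
PRICES = {
    "storage_per_gb_month": Decimal("0.020"),
    "egress_per_gb": Decimal("0.080"),
    "per_1000_requests": Decimal("0.0005"),
}


def monthly_bill(stored_gb: int, egress_gb: int, requests: int,
                 prices: dict[str, Decimal] = PRICES) -> dict[str, Decimal]:
    """Split an object-storage bill into storage, egress and request charges."""
    storage = stored_gb * prices["storage_per_gb_month"]
    egress = egress_gb * prices["egress_per_gb"]
    reqs = Decimal(requests) / 1000 * prices["per_1000_requests"]
    return {"storage": storage, "egress": egress, "requests": reqs,
            "total": storage + egress + reqs}


# 10,000 GB stored, the same amount downloaded once, 5 million requests.
bill = monthly_bill(stored_gb=10_000, egress_gb=10_000, requests=5_000_000)
for item, cost in bill.items():
    print(f"{item:>8}: ${cost:.2f}")
```

With these placeholder numbers, reading the whole 10,000 GB out once a month costs four times as much as storing it, which is why low-egress or egress-free providers can change the total so much.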

2. Data Rules

Some businesses must follow rules about where their data is kept. For example, GDPR in Europe restricts transferring personal data outside the EU, and some rules in India, such as the Reserve Bank of India's requirement for payment data, say that data must be stored inside the country. By using a local cloud provider, businesses can follow the law and also build trust with customers by keeping their data close to home.

3. Speed and Latency

Speed is also very important. If a company's data sits on servers far away from its users, every request takes longer. This delay is called latency. Local or regional providers can offer faster access, which is very useful for apps that need real-time data or quick updates.
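One simple way to act on latency is to time a few requests to each candidate region and pick the one with the lowest median. The sketch below assumes you already have a function that makes one small request to a region; the region names and timings in the example are hypothetical.

```python
import statistics
import time
from typing import Callable


def time_call_ms(fn: Callable[[], object], repeats: int = 5) -> list[float]:
    """Call fn several times and return each round-trip time in milliseconds."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def fastest_region(samples_ms: dict[str, list[float]]) -> str:
    """Return the region with the lowest median latency (the median ignores one-off spikes)."""
    return min(samples_ms, key=lambda region: statistics.median(samples_ms[region]))


# Hypothetical measurements; in practice build these with time_call_ms().
samples = {
    "region-far": [180.0, 175.0, 190.0],
    "region-near": [22.0, 25.0, 90.0],  # one slow outlier
}
print(fastest_region(samples))  # region-near
```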

4. Support and Customization

Support and flexibility are another reason. Big cloud providers offer basic support, which can feel slow. Some smaller providers offer personal support and direct contact with engineers, and they build custom solutions for each business. This makes it easier for companies to use the cloud in the way that best fits their needs.

5. Multi Cloud Strategy

Many companies do not want to depend only on Amazon S3. If they use only one provider, it can be hard to move later (this is called vendor lock-in). Using more than one cloud gives more freedom, reduces risk and improves backup and recovery. This way businesses can spread their workloads and keep better control.
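The backup-and-recovery benefit comes from keeping copies in more than one place and reading from whichever copy is reachable. Below is a minimal in-memory sketch of that idea; `InMemoryProvider`, `replicate` and `read_with_fallback` are hypothetical names standing in for real storage clients, not any provider's API.

```python
class InMemoryProvider:
    """Hypothetical stand-in for one cloud's object store."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.objects: dict[str, bytes] = {}
        self.online = True

    def _check(self) -> None:
        if not self.online:
            raise ConnectionError(f"{self.name} is unreachable")

    def put(self, key: str, data: bytes) -> None:
        self._check()
        self.objects[key] = data

    def get(self, key: str) -> bytes:
        self._check()
        return self.objects[key]  # raises KeyError if the object is missing


def replicate(providers: list[InMemoryProvider], key: str, data: bytes) -> None:
    """Write the same object to every provider."""
    for provider in providers:
        provider.put(key, data)


def read_with_fallback(providers: list[InMemoryProvider], key: str) -> tuple[str, bytes]:
    """Try providers in order and return (provider name, data) from the first that works."""
    failures = []
    for provider in providers:
        try:
            return provider.name, provider.get(key)
        except (ConnectionError, KeyError) as exc:
            failures.append(f"{provider.name}: {exc!r}")
    raise LookupError(f"{key!r} not readable from any provider: {failures}")


cloud_a, cloud_b = InMemoryProvider("cloud-a"), InMemoryProvider("cloud-b")
replicate([cloud_a, cloud_b], "backups/db.dump", b"snapshot")
cloud_a.online = False  # simulate an outage at the first provider
print(read_with_fallback([cloud_a, cloud_b], "backups/db.dump"))  # ('cloud-b', b'snapshot')
```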

Amazon S3 (Simple Storage Service)

Amazon S3 is one of the best-known cloud storage services in the world. It is run by AWS (Amazon Web Services). Small companies, big companies and even governments use it. It is popular because it is safe, easy to use and can grow with your data.

What is Amazon S3

Amazon S3 is like a very big online hard drive. You can keep files, photos, videos, backups or logs in it. The best part is that you do not need to buy hardware or look after machines: you upload your data and Amazon manages the infrastructure.

Easy Features of Amazon S3

Unlimited Storage

You can save as much data as you want. There is no limit on total storage, although a single object (file) can be at most 5 TB.

Example → It can keep 10 photos or even 10 million videos.

Different Types of Storage

- S3 Standard → For data you use every day

- S3 Intelligent-Tiering → Automatically moves files you do not use often to cheaper access tiers

- S3 Glacier and Glacier Deep Archive → For very old files that you hardly use but still want to keep safe, like old records

This saves money because you only pay the higher price for the data you use often.
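Moving data between these classes can be automated with a lifecycle rule. The sketch below builds a rule in the dictionary shape that the S3 lifecycle API accepts: objects under a prefix move to S3 Standard-IA after 30 days, to S3 Glacier Deep Archive after a year, and are deleted after about seven years. The prefix, day counts and rule ID are illustrative choices, not recommendations.

```python
import json


def archive_logs_rule(prefix: str, ia_after_days: int = 30,
                      deep_archive_after_days: int = 365,
                      expire_after_days: int = 2555) -> dict:
    """Build an S3 lifecycle configuration that tiers objects down, then expires them."""
    if not ia_after_days < deep_archive_after_days < expire_after_days:
        raise ValueError("transition and expiration days must increase")
    return {
        "Rules": [
            {
                "ID": f"tier-down-{prefix.rstrip('/')}",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_after_days, "StorageClass": "STANDARD_IA"},
                    {"Days": deep_archive_after_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
                "Expiration": {"Days": expire_after_days},
            }
        ]
    }


config = archive_logs_rule("logs/")
print(json.dumps(config, indent=2))
```

With the AWS SDK for Python (boto3) installed and credentials configured, you could apply it with `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket="example-bucket", LifecycleConfiguration=config)`, where `example-bucket` is a placeholder.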

Very Safe and Always Available

Amazon S3 keeps multiple copies of your files across several facilities. Even if one facility stops working, your data is still safe. S3 is designed for 99.999999999% (eleven nines) durability, which means losing a file is extremely unlikely.
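Eleven nines is easier to grasp as arithmetic. Treating it as an annual durability figure per object, the expected number of lost objects per year is the object count times (1 - durability). AWS illustrates it the same way: with 10,000,000 objects you would expect, on average, to lose a single object about once every 10,000 years. Exact fractions avoid floating-point rounding in such a tiny loss rate.

```python
from fractions import Fraction


def years_per_expected_loss(num_objects: int, durability: Fraction) -> Fraction:
    """Average number of years between single-object losses."""
    annual_loss_per_object = 1 - durability
    expected_losses_per_year = num_objects * annual_loss_per_object
    return 1 / expected_losses_per_year


ELEVEN_NINES = Fraction(99_999_999_999, 100_000_000_000)  # 0.99999999999
print(years_per_expected_loss(10_000_000, ELEVEN_NINES))  # 10000
```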

Strong Security

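Amazon S3 keeps your data safe. It offers access control through IAM and bucket policies, encryption, and Object Lock to stop files from being changed or deleted, and it can be used in GDPR- and HIPAA-compliant setups. You decide who can see or download your files.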
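As an example of deciding who can download files, here is a sketch that builds an S3 bucket policy granting one IAM user read-only access to a single folder (prefix). The bucket name, prefix and account/user ARN are placeholders. Note that `s3:GetObject` only allows downloading; listing the folder would also need `s3:ListBucket` on the bucket itself.

```python
import json


def read_only_prefix_policy(bucket: str, prefix: str, principal_arn: str) -> dict:
    """Allow one principal to download objects under bucket/prefix, and nothing else."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowReadOfPrefix",
                "Effect": "Allow",
                "Principal": {"AWS": principal_arn},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/{prefix}*",
            }
        ],
    }


# Placeholder bucket, prefix and account ID.
policy = read_only_prefix_policy(
    "example-bucket", "reports/", "arn:aws:iam::111122223333:user/analyst"
)
print(json.dumps(policy, indent=2))
```

With boto3, the policy would be applied as a JSON string, for example `boto3.client("s3").put_bucket_policy(Bucket="example-bucket", Policy=json.dumps(policy))`.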

Easy to Connect

Amazon S3 works with many other AWS services, like EC2, Athena and Redshift. Developers can also connect apps, websites and AI tools directly to S3.

Fast Speed

Amazon S3 delivers data quickly, and it works with Amazon CloudFront, AWS's content delivery network (CDN), which caches content close to users so websites and apps load quickly everywhere.

Why Companies Use Amazon S3

- Startups use it to keep app data and media files

- Big companies use it for backup, analytics and compliance

- Media companies use it to keep videos and images for people around the world

- AI and ML teams use it to store very big data for training models

Best For

Amazon S3 is best for businesses that need safe and global storage. It is also best for companies already using AWS services because it connects very easily with them.

In Short

Amazon S3 is like the backbone of cloud storage. It is simple, powerful and trusted everywhere. But still some companies look for other options. Some want to save money, some need local data rules and some want special features.

1. MinIO – The High-Performance Open-Source S3 Alternative

In today’s cloud world, data is like oil, and the real challenge is how to manage it well. Old storage systems cannot keep up with the fast growth of data, which creates problems with speed, cost and scaling.

This is where MinIO comes in.

MinIO is a fast, open-source object storage platform that is compatible with the Amazon S3 API. It is built for modern companies and handles cloud apps, AI and ML, big data and important business workloads with high speed and ease of use.

Unlike old storage, MinIO is lightweight but very powerful. It can handle very large amounts of data at high speed. Whether you are a startup building AI models or a big company running global workloads, MinIO gives you reliability and freedom without locking you into one vendor.

Key Strengths of MinIO

- Amazon S3 Compatibility

MinIO is compatible with the Amazon S3 API. This means most apps built for Amazon S3 can run on MinIO by changing only the endpoint and credentials, not the application code. This makes adoption easy and migration simple for businesses already using S3.
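In practice, changing the endpoint mostly means changing the URL an S3 client talks to. AWS usually puts the bucket in the host name (virtual-hosted style), while a MinIO server without a configured domain typically expects the bucket in the path (path style). The hypothetical helper below builds both URL forms for the same object; `localhost:9000` is MinIO's default local address and `example-bucket` is a placeholder.

```python
from urllib.parse import quote, urlsplit


def object_url(endpoint: str, bucket: str, key: str, path_style: bool) -> str:
    """Build the URL for one object in either path style or virtual-hosted style."""
    parts = urlsplit(endpoint)
    encoded_key = quote(key, safe="/")  # spaces and special characters are percent-encoded
    if path_style:
        # Bucket goes in the path: http://host/bucket/key
        return f"{parts.scheme}://{parts.netloc}/{bucket}/{encoded_key}"
    # Bucket goes in the host name: https://bucket.host/key
    return f"{parts.scheme}://{bucket}.{parts.netloc}/{encoded_key}"


aws = object_url("https://s3.us-east-1.amazonaws.com", "example-bucket", "photos/cat 1.jpg", path_style=False)
minio = object_url("http://localhost:9000", "example-bucket", "photos/cat 1.jpg", path_style=True)
print(aws)    # https://example-bucket.s3.us-east-1.amazonaws.com/photos/cat%201.jpg
print(minio)  # http://localhost:9000/example-bucket/photos/cat%201.jpg
```

With boto3, the equivalent switch is usually `endpoint_url="http://localhost:9000"` plus `botocore.config.Config(s3={"addressing_style": "path"})`, while the rest of the application code stays the same.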

- Extreme Performance at Scale

When speed is important MinIO delivers. It is made for fast data use and low delay. It works best for tasks where old systems are slow such as:

- AI and ML training data

- Big data and real time analytics

- Streaming and high speed apps

With MinIO you not only store data but can also move, process and analyze it very fast.

- Easy Scalability

MinIO can start small on one server and grow to many servers handling petabytes of data. As your business grows, MinIO grows with you, keeping high performance and reliability even when your data becomes very large.

- Kubernetes Native Storage

MinIO is built for the cloud-native world. It runs natively on Kubernetes and fits into DevOps, CI/CD and container-based apps. For developers building modern microservices, MinIO is a strong choice for storage.

- Enterprise-Grade Security

Security is at the heart of MinIO. It provides:

- Server-side encryption for data at rest

- IAM-based access control with fine-grained permissions

- Policy enforcement to maintain compliance

- Audit logging for governance

This ensures that even the most sensitive enterprise data remains secure, compliant, and protected end-to-end.

Why Choose MinIO Over Amazon S3

Amazon S3 is a market leader, but it has some trade-offs, like vendor lock-in, rising costs and less control over your setup. MinIO addresses these problems.

Full Control – You decide where your data lives. Run it on your own servers, on any cloud or on both. Move your data whenever you want. Keep it inside your country if the rules say so. Set your own backup policies. Upgrade when you are ready, not when a vendor forces you.

Open Source Advantage – The code is open for everyone, and a large community tests and improves it every day. You can use it for free under the GNU AGPL v3 license or pay for a commercial license and enterprise support if needed. You can switch anytime. There is no black box and no vendor lock-in.

Cost Savings – Use standard hardware or your existing cloud nodes, and pay only for what you run. MinIO itself adds no fees for moving data out or for API calls, although the cloud you run it on may charge for network traffic. You can plan your monthly cost clearly and grow step by step without surprise bills.

Performance Leadership – MinIO is built for speed. It works well with both small and very large files. Even when thousands of users read and write at the same time, it stays fast. It is great for AI and ML training sets, real-time analytics, logs, videos and backups. Low latency makes apps feel faster, and high throughput means large jobs finish quickly.

Kubernetes First – MinIO installs easily on Kubernetes. It fits smoothly into DevOps workflows, CI/CD pipelines and microservices. As your cluster grows, MinIO scales with it. Teams can automate setup, updates and recovery with simple scripts.

Built-in Security – Your data can be encrypted both at rest and while moving across the network. Access keys and policies control who can see what. Versioning helps you restore old files if something is deleted or attacked.
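To show why versioning makes recovery possible, here is a toy in-memory model of the S3-style behavior MinIO follows: every write adds a new version, and a normal delete only adds a delete marker on top instead of erasing data. `VersionedBucket` and its methods are hypothetical names for illustration, not MinIO's implementation or client API.

```python
_DELETE_MARKER = object()  # sentinel meaning "deleted as of this version"


class VersionedBucket:
    """Toy model of object versioning with delete markers (illustrative only)."""

    def __init__(self) -> None:
        self._versions: dict[str, list] = {}

    def put(self, key: str, data: bytes) -> int:
        """Store a new version and return its (hypothetical) version number."""
        history = self._versions.setdefault(key, [])
        history.append(data)
        return len(history) - 1

    def delete(self, key: str) -> None:
        """A plain delete keeps old versions and stacks a delete marker on top."""
        self._versions.setdefault(key, []).append(_DELETE_MARKER)

    def get(self, key: str) -> bytes:
        """Return the current version, as a non-versioned read would."""
        history = self._versions.get(key, [])
        if not history or history[-1] is _DELETE_MARKER:
            raise KeyError(key)
        return history[-1]

    def restore_latest(self, key: str) -> bytes:
        """Copy the newest real (non-marker) version back to the top."""
        for data in reversed(self._versions.get(key, [])):
            if data is not _DELETE_MARKER:
                self.put(key, data)
                return data
        raise KeyError(key)


bucket = VersionedBucket()
bucket.put("report.csv", b"v1")
bucket.put("report.csv", b"v2")
bucket.delete("report.csv")          # accidental delete
bucket.restore_latest("report.csv")  # copy the last good version back
print(bucket.get("report.csv"))      # b'v2'
```

Real S3-compatible servers also let you recover by removing the delete marker or by copying a specific version ID; the copy approach is shown here only because it is the simplest to model.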

The Future of Storage is Open and Cloud Native

For businesses that want the power of S3 without the limits of the public cloud, MinIO is a strong fit. You can start small and grow to petabyte scale. Run it in your own data center, in a private cloud or in any public cloud. Your apps do not need to change because the S3 API stays the same. That is real freedom.

If your business needs high speed, scale and full control, MinIO is not just an option: it is the future of storage.

2. Microsoft Azure Blob Storage

Microsoft Azure Blob Storage is Microsoft's object storage service and plays the same role as Amazon S3, although it uses its own API rather than the S3 API. It is used to store very large amounts of unstructured data like documents, photos, videos, backups and logs. Many big companies that already use Microsoft tools like it because it connects smoothly with other Microsoft products and services.

Key Features in Simple Words

Different Storage Tiers

- Hot Tier → For data that you use every day like app files, daily business work or media. It costs a little more but it is very fast.

- Cool Tier → For data that you do not use every day but still need sometimes like monthly reports or old backups. It saves money compared to Hot Tier.

- Archive Tier → For data that you rarely use like old documents, legal records or very old backups. It is the cheapest option but it takes more time to restore files.

These storage types help companies save money and still keep their data safe.
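One trade-off to keep in mind: Azure charges an early deletion fee if a blob leaves the Cool tier before 30 days or the Archive tier before 180 days, prorated for the remaining days. The small calculation below shows how many days would still be billed; the tier minimums are taken from Azure's documentation as we understand it, so check the current values before relying on them.

```python
# Minimum days a blob is billed for in each tier (per Azure docs; verify current values).
MIN_DAYS_IN_TIER = {"Hot": 0, "Cool": 30, "Archive": 180}


def early_deletion_days(tier: str, days_in_tier: int) -> int:
    """Days still billed if a blob is deleted or moved out of `tier` after `days_in_tier` days."""
    if days_in_tier < 0:
        raise ValueError("days_in_tier must be non-negative")
    return max(0, MIN_DAYS_IN_TIER[tier] - days_in_tier)


print(early_deletion_days("Archive", 60))  # 120 days still billed
print(early_deletion_days("Cool", 45))     # 0, the 30-day minimum was already met
```

This is why Archive suits data you are confident you will keep for months, while short-lived files are usually cheaper to leave in Hot or Cool.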

Works Well with Microsoft Tools

Azure Blob connects easily with Office 365, Microsoft Teams, Azure AI and DevOps tools.

- Example → A company using Office 365 can connect its documents directly with Blob.

- Developers using Azure DevOps can store files for their projects.

- AI teams can use stored images or videos to train AI models.

Because of this Azure Blob is the natural choice for businesses that already use Microsoft products.

Strong Security

Azure Blob keeps your data safe in many ways.

- It encrypts files when stored and when moved.

- Only people with permission can open or edit the data.

- It works with Microsoft Entra ID (formerly Azure Active Directory), so large companies with many employees can easily manage who has access.

This strong security makes it trusted by banks, hospitals and even governments.

Global Data Centers

Microsoft has one of the biggest networks of data centers in the world. Blob Storage lets you keep data closer to your users.

- This gives faster speed.

- With geo-redundant storage enabled, a copy of your data is kept in a second region, so it stays safe if one region has a problem.

- It is very useful for businesses with customers in different countries.

Best For

Microsoft Azure Blob Storage is best for:

- Companies that already use Microsoft services like Office 365, Teams or Azure DevOps.

- Enterprises that want both local servers and cloud together.

- Businesses that want flexible cost options with Hot, Cool and Archive tiers.

- Industries that need very strong security, like banking, healthcare and government.

3. Transform the Way You Store & Scale Data with S3 Compatible, High Performance Cloud Object Storage by Utho

- When exploring alternatives to Amazon S3, Utho Cloud Object Storage emerges as a strong competitor. It is designed with unlimited scalability, enterprise-grade durability, and advanced security, making it suitable for businesses across industries like AI/ML, e-commerce, finance, media, and healthcare.

- Unlike many storage solutions that struggle with growth and performance, Utho provides a scalable and durable architecture that simplifies management while ensuring data integrity and high performance. Whether your requirement is disaster recovery, application data storage, archival storage, or big data analytics, Utho offers the flexibility to handle it all.

Benefits of Choosing Utho Over Amazon S3 — Deep Dive

1) Lower Complexity

Utho’s scalable and durable architecture keeps storage management simple. As your data grows, capacity can be expanded seamlessly—without “lift and shift” complications. With customizable data management, you can set policies and structures according to your workload, making daily operations much easier.

Result: Reduced operational overhead, faster onboarding, and more time for teams to focus on core business activities.

2) More Cost-Efficient

With a pay-as-you-go model, you only pay for what you actually use. Flexible pricing removes the pressure of over-provisioning, while optimized performance prevents wasted costs due to inefficiencies.

Result: Predictable spending, better ROI, and tighter budget control—without hidden charges.

3) Enhanced Data Security

Utho provides data encryption, access controls, multi-factor authentication, audit trails, and intrusion detection—together forming enterprise-grade protection. This comprehensive security stack safeguards workloads from the application layer down to storage.

Result: Sensitive workloads (finance, healthcare, e-commerce, media) gain end-to-end protection, ensuring strong governance and compliance.

4) Business Flexibility

Whether you’re a startup or a large enterprise, Utho’s unlimited scalability and high performance fit equally well. API access enables straightforward integrations, while customizable management adapts to different team needs.

Result: Faster innovation for startups; predictable scaling for enterprises—all on one platform.

5) Better Integration

Automatic backup integration with platforms like cPanel and WordPress makes daily operations smoother—eliminating the need for manual scripts and backup cycles. API-based access allows frictionless plug-ins with existing tools, pipelines, and services.

Result: Faster setup, less maintenance, and smoother backup/restore tasks.

6) Disaster Recovery Ready

With multi-region availability, businesses can confidently design disaster recovery strategies. Even if one region experiences issues, workloads remain accessible. Data durability ensures data integrity even during hardware failures or power outages.

Result: Higher uptime, strong business continuity, and compliance-friendly resilience.

Ideal Use Cases — Where Utho Fits Best

1) Backup & Disaster Recovery

Automated backups (via cPanel/WordPress) combined with multi-region availability ensure quick restores and reliable disaster recovery drills. The durable architecture safeguards data against accidental loss and system failures.

Why it fits: Easy scheduling, reliable restores, and complete confidence during outages.

2) Big Data Analytics

Unlimited scalability makes it simple to store, ingest, and retain massive datasets. Optimized performance provides steady throughput to analytics engines, ensuring predictable query performance.

Why it fits: Scale without restructuring storage, and run analytics at high speed.

3) AI/ML Workloads

AI/ML pipelines need high-throughput object storage. Utho’s optimized performance supports model training, feature stores, and experiment tracking, while API access enables seamless pipeline integration.

Why it fits: Faster data access for training/inference and simplified MLOps workflows.

4) Media Storage & Streaming

Large media workloads demand durability and performance. Utho’s multi-region availability enhances global accessibility, ensuring smooth content delivery.

Why it fits: Reliable origin storage, consistent reads, and availability across geographies.

5) Archival & Long-Term Storage

Long-term storage requires cost-efficiency and reliability. With pay-as-you-go pricing and durable architecture, businesses can preserve data without overspending.

Why it fits: Budget-friendly data preservation with guaranteed integrity.

6) Application & E-commerce Data

From transactional logs to product media and user uploads, applications need secure and always-available storage. Utho’s advanced security and API access integrate seamlessly with application stacks.

Why it fits: Secure-by-default storage, clean integrations, and consistent performance.

With its scalability, cost-effectiveness, and enterprise-grade security, Utho Cloud Object Storage isn’t just an alternative to Amazon S3—it’s a smarter choice for businesses that want flexibility, resilience, and reliability without the hidden complexity.

Why Utho

- Fully Indian, with no foreign access

- Much cheaper than AWS, GCP and Azure

- Works with S3 and supports easy migration

- Follows Indian laws, keeping data safe and legal

- 24/7 support with real human help in India

Utho is more than just a storage service. It is India’s trusted cloud platform for all types of businesses. It is secure, fast, legal and affordable. Utho helps companies grow with confidence without worrying about security or compliance.

Features of Utho Object Storage

- Unlimited Scalability – Businesses can store any amount of data easily and pay only for what they use

- Ideal Use Cases – Good for virtual machines, disaster recovery, AI/ML, media, e-commerce, finance, healthcare and more

- Scalable and Durable Architecture – Storage grows as data grows while keeping it reliable and simple to manage

- Optimized Performance – Fast and secure for business needs

- Data Durability – Data stays safe even during hardware failures, power outages or technical problems

- Automatic Backup Integration – Works with platforms like cPanel and WordPress for easy backup

- Advanced Security – Data encryption, access controls, audit logs, multi-factor authentication and intrusion detection keep information safe

- Customizable Data Management – Businesses can organize and manage data as they want

- Cost Efficiency – Pay only for what you use and save money

- API Access – Easy connection with applications and tools through API

- Multi-Region Availability – Data stays safe and accessible in case of disaster

- Use Cases – Backup and disaster recovery, big data analytics, media storage, application data storage and archival storage

In short, Utho Sovereign Cloud is India’s own cloud made for Indian businesses. It keeps data safe, follows the law, and is affordable and fast. It is more than storage; it is a trusted platform that helps companies work safely, grow faster and stay in control of their data.

4. Backblaze B2 Cloud Storage – Simple and Affordable

Backblaze B2 is one of the easiest and cheapest cloud storage services. It is made to be simple, reliable and cost effective. This makes it perfect for developers, small businesses and media companies. They can store their data safely without dealing with the complexity of Amazon S3 or other big cloud platforms.

Key Features

1. Low Cost Storage

Backblaze B2 is very affordable. Startups, small businesses and content creators can store a lot of data without spending too much money. The price is low so companies can grow and store more data without worrying about big monthly bills.

2. Easy to Use

You do not need to be a cloud expert to use Backblaze B2. Creating storage spaces called buckets, uploading files and managing your data is simple and fast. Developers, small teams or anyone who likes easy tools can use it without problems.

3. Works with Many Tools

Backblaze B2 works with many popular backup tools, media applications and other software. You can use it for backups, storing videos and images, sharing files or team collaboration. It fits easily into existing workflows without extra effort.

4. Reliable and Secure

Backblaze B2 is built to keep data safe. It spreads each file across many drives and servers, so a single hardware failure does not make your files unavailable, and you can replicate data to another region for extra protection. This means businesses can trust their data and keep working without interruption.

Best For

- Developers who need simple storage for apps or backups

- Startups and small businesses who want affordable cloud storage

- Media companies who store large amounts of video, audio or images

- Anyone who wants storage that is easy to manage, reliable and safe

Why Choose Backblaze B2

- Cheap – Very affordable cloud storage

- Simple – Easy setup and management without technical problems

- Flexible – Works with many apps and tools

- Secure – Data is safe and always available

In short, Backblaze B2 is simple, cheap and reliable cloud storage. It is perfect for developers, startups, small and medium businesses and media companies. It gives hassle free storage so businesses can focus on their work without worrying about managing data.

5. IBM Cloud Object Storage

IBM Cloud Object Storage is a strong, secure cloud storage service made for big companies and organizations. It is good for keeping important and sensitive data safe, and it works with AI tools to help businesses use their data.

Key Features

High Security

IBM Cloud Object Storage keeps data safe by encrypting it both when it is stored and when it is being sent. This protects financial records, health data and other important files from people who should not see them.

Works with IBM Watson

IBM Cloud Object Storage works with IBM Watson, so companies can use AI to analyze their data and get useful insights. They can make better decisions, predict trends and improve their services without moving data to another system.

Flexible Storage Options

IBM Cloud Storage has different types of storage. You can pick fast storage for data you use often or long-term storage for data you use less. This helps businesses save money and pay only for what they need.

Compliance with Rules

IBM Cloud Object Storage is built to meet strict regulatory requirements, which is important for healthcare, banking and government. It helps make sure data is stored safely and legally, reducing risk for the company.

Best For

IBM Cloud Storage is good for:

- Big companies that handle important or regulated data

- Healthcare organizations that manage patient records

- Banks and financial institutions needing safe data storage

- Government offices that need secure, reliable storage

- Companies that want AI and data insights built into storage

Why IBM Cloud Storage is Good

- Safe: Protects important data like a strong safe

- AI Tools: Works with IBM Watson for smart insights

- Flexible: Can choose fast or long-term storage

- Compliant: Follows healthcare, finance and government rules

- Grows with You: Can store huge amounts of data

In short, IBM Cloud Object Storage is safe, simple and smart cloud storage. It is a good fit for big companies and organizations that need more than just storage: a system that keeps data safe, follows rules and helps them use data with AI.

6. DigitalOcean Spaces – Simple Cloud Storage for Everyone

DigitalOcean Spaces is a cloud storage service that is very simple, fast and affordable. It is made for developers, startups and small businesses who want to save and manage their data without complicated setup or high costs. Spaces is also S3-compatible, so most apps and tools built for S3 work with little or no change.

Easy to Start

DigitalOcean Spaces is very easy to use. You can create a storage bucket in a few minutes and start uploading files, without needing to be an expert or working through a long setup. Even beginners can start storing files and managing data quickly.

You can organize your files in folders, set permissions for who can see or use them, and start using your storage immediately. There is no complicated setup.

Fast Access Anywhere

Spaces comes with a built-in Content Delivery Network (CDN). This means your files can load fast for users anywhere in the world. Whether your users are in India, Europe or America they can get your data quickly.

Fast access is very important for:

- Websites that have images or videos.

- Apps that need to show content quickly.

- Media platforms with heavy files.

This ensures your users do not have to wait and your apps run smoothly.
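With the CDN turned on for a Space, the same file is reachable from two host names: the origin endpoint and a CDN endpoint that adds `.cdn` before the domain. The helper below builds both; the Space name `example-assets`, the region `nyc3` and the file path are placeholders, and you should confirm the host names shown in your DigitalOcean control panel.

```python
from urllib.parse import quote


def spaces_urls(space: str, region: str, key: str) -> dict[str, str]:
    """Return the origin and CDN URLs for one file in a DigitalOcean Space."""
    path = quote(key, safe="/")
    return {
        "origin": f"https://{space}.{region}.digitaloceanspaces.com/{path}",
        "cdn": f"https://{space}.{region}.cdn.digitaloceanspaces.com/{path}",
    }


urls = spaces_urls("example-assets", "nyc3", "img/logo.png")
print(urls["cdn"])  # https://example-assets.nyc3.cdn.digitaloceanspaces.com/img/logo.png
```

Websites would typically link to the CDN URL so visitors are served from a nearby edge location, while uploads still go to the origin endpoint.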

Works Like Amazon S3

DigitalOcean Spaces is S3-compatible. Many apps and tools are built for S3, and normally moving to a new cloud would mean changing a lot of code. But with Spaces:

- You can use the same S3 APIs and tools you already know.

- Your applications work without big changes.

- You can move your data from S3 easily.

This makes it simple to switch clouds without stopping your work. You get the benefits of S3 but in a way that is easier and cheaper.
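Because these services speak the S3 API, switching providers in an S3 SDK usually comes down to two settings: the endpoint URL and the region. The table below sketches that idea for a few providers covered in this article; the host-name patterns reflect each provider's public documentation as we understand it, so verify them (and the region codes) before use.

```python
# Endpoint patterns for a few S3-compatible services (verify against each provider's docs).
S3_ENDPOINTS = {
    "aws": "https://s3.{region}.amazonaws.com",
    "digitalocean": "https://{region}.digitaloceanspaces.com",
    "linode": "https://{region}.linodeobjects.com",
    "backblaze": "https://s3.{region}.backblazeb2.com",
}


def client_settings(provider: str, region: str) -> dict[str, str]:
    """Return the keyword arguments that differ between S3-compatible providers."""
    try:
        pattern = S3_ENDPOINTS[provider]
    except KeyError:
        raise ValueError(f"unknown provider {provider!r}; known: {sorted(S3_ENDPOINTS)}") from None
    return {"endpoint_url": pattern.format(region=region), "region_name": region}


print(client_settings("digitalocean", "nyc3"))
# {'endpoint_url': 'https://nyc3.digitaloceanspaces.com', 'region_name': 'nyc3'}
```

These keys match the `endpoint_url` and `region_name` parameters of `boto3.client("s3", ...)`, so the same upload and download code can target a different provider by passing different settings along with that provider's access keys.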

Predictable and Affordable Pricing

Amazon S3 pricing can be confusing, with separate charges for storage, requests and data transfer. DigitalOcean Spaces has simple, flat pricing, so you always know what you will pay.

This is very helpful for startups and small businesses. They can plan their costs and budget without surprises. There are no hidden fees and no complex bills to worry about.
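Flat plans are predictable because the bill is a fixed base fee plus simple per-GB overage charges. The sketch below shows that calculation; every number in `PLAN` is a made-up placeholder, not DigitalOcean's actual pricing, so replace the values with the figures on the provider's pricing page.

```python
from decimal import Decimal

# Made-up placeholder plan, NOT DigitalOcean's real prices.
PLAN = {
    "base_fee": Decimal("10.00"),
    "included_storage_gb": 500,
    "included_transfer_gb": 2000,
    "extra_storage_per_gb": Decimal("0.03"),
    "extra_transfer_per_gb": Decimal("0.015"),
}


def monthly_cost(storage_gb: int, transfer_gb: int, plan: dict = PLAN) -> Decimal:
    """Base fee plus overage for anything beyond the bundled storage and transfer."""
    extra_storage = max(0, storage_gb - plan["included_storage_gb"])
    extra_transfer = max(0, transfer_gb - plan["included_transfer_gb"])
    return (plan["base_fee"]
            + extra_storage * plan["extra_storage_per_gb"]
            + extra_transfer * plan["extra_transfer_per_gb"])


print(monthly_cost(400, 1500))  # within the bundle: just the base fee
print(monthly_cost(700, 2500))  # base fee + 200 GB extra storage + 500 GB extra transfer
```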

Who Should Use DigitalOcean Spaces

- Startups that want easy cloud storage without hiring a big IT team.

- Developers building apps or websites who need reliable storage.

- Small businesses who want fast content delivery without complicated setup.

- Anyone using S3-based applications who wants a simpler cheaper alternative.

Why DigitalOcean Spaces is a Good Choice

- Simple – easy to set up and use even for beginners.

- Fast – files and data load quickly anywhere in the world.

- S3 Compatible – works with apps designed for Amazon S3.

- Affordable – clear pricing with no hidden fees.

In Short

DigitalOcean Spaces gives you the power of Amazon S3 without the high cost or complexity. It is simple, fast, reliable and affordable.

It is perfect for startups, small businesses and developers who want S3-like cloud storage but without technical problems, expensive pricing or complicated setup.

7. Linode Object Storage (Akamai Cloud)

Linode Object Storage is a cloud storage service now part of Akamai Cloud. It is made to be flexible, reliable and cheap. It helps developers, startups and companies store data easily. Using Akamai’s network, Linode gives fast and steady access to data from anywhere in the world.

Key Features

Works with S3 Applications

Linode Object Storage is S3-compatible, so companies can move data or apps from AWS S3 with few changes. This makes it easy to switch or to use it alongside existing tools.

Available Worldwide

Linode uses Akamai’s network to give fast access to data all over the world. Users in Asia, Europe or America can get data quickly which helps apps run better.

Transparent Pricing

Linode has clear pricing and no hidden fees. Companies know how much they will pay. This is good for startups and small businesses.

Multi-Cloud Ready

Linode works well with other cloud providers. Companies can use it with multiple clouds without problems. This gives flexibility and freedom to design cloud storage the way they want.

Best For

Linode is good for developers, startups and businesses that need:

- Storage that can grow with their needs.

- S3 compatibility to work with existing apps.

- Fast access worldwide through Akamai.

- Cheap and clear pricing.

Why Linode is Good

- Works with S3: Easy to switch from AWS.

- Global Access: Fast data everywhere.

- Clear Costs: No surprises.

- Flexible Multi-Cloud: Works with other cloud providers.

In short, Linode Object Storage (Akamai Cloud) is reliable, flexible and fast. It is a good choice for businesses and developers who need affordable storage that works with S3 and with multiple clouds.

8. Oracle Cloud Object Storage – Strong and Easy Cloud Storage for Big Companies

Oracle Cloud Object Storage is cloud storage made by Oracle for big companies. It is built to handle large amounts of data safely and efficiently.

Amazon S3 is very popular, but Oracle Cloud can be a better choice for companies that already use Oracle software, like databases, ERP systems and other Oracle applications.

Oracle Cloud is secure, reliable, flexible on cost and very easy to use with Oracle tools. It is a strong alternative to Amazon S3, especially for large businesses.

Key Features

1. Different Storage Levels to Save Money

Oracle Cloud offers different storage tiers so companies can save money and still get their data when needed.

- Standard (hot) – for files used every day that you need quickly, for example active files and daily reports.

- Infrequent Access (cool) – for files used sometimes but not every day, for example monthly backups.

- Archive – for old files used rarely but kept for compliance, for example historical logs or records. Archived objects must be restored before they can be read.

This is similar to S3 Standard, S3 Standard-IA and S3 Glacier, and Oracle's smaller set of tiers can make costs easier to understand for big companies.

2. Works Well with Oracle Applications

Oracle Cloud connects easily with Oracle databases and business software.

If your company uses Oracle ERP, HCM or databases, Oracle Cloud makes storing, moving and accessing data much easier.

You do not need a complicated setup or extra third-party tools.

It saves time and reduces mistakes compared to Amazon S3.

3. Helps Follow Rules and Regulations

Oracle Cloud Object Storage is built for industries with strict rules like finance, healthcare and government.

It helps companies follow important standards:

- Finance – keeps banking and financial data safe.

- Healthcare – follows HIPAA rules for patient records.

- Government – protects sensitive or classified information.

Amazon S3 also supports compliance but Oracle Cloud is simpler for companies that already use Oracle software in regulated industries.

4. Safe, Reliable and Scalable

Oracle Cloud keeps your data safe and always available.

- Durable – makes multiple copies of your data so nothing is lost if hardware fails.

- Secure – encrypts data and controls who can see or change it.

- Scalable – lets you increase storage easily as your business grows without slowing apps.

Oracle Cloud is like S3 but designed to work smoothly with big Oracle systems.

Best For

- Large companies that already use Oracle databases and software.

- Industries with strict rules like finance, healthcare and government.

- Companies with huge amounts of data that need cost-effective storage and easy scaling.

Why Oracle Cloud is a Good Alternative to S3

- Works easily with Oracle applications.

- Clear pricing and cheaper storage with tiered options.

- Strong compliance support for regulated industries.

- Secure, durable and scalable for large enterprises.

In Short

Amazon S3 is popular with many startups and developers, but Oracle Cloud Object Storage is often a better fit for big companies using Oracle software or working in industries with strict rules.

It is more than just storage: it is safe, reliable, cost-effective and fits fully into the Oracle software ecosystem.

9. Alibaba Cloud OSS – Simple and Strong Cloud Storage

Alibaba Cloud Object Storage Service (OSS) is cloud storage made by Alibaba Cloud. Alibaba Cloud is the largest cloud provider in China and is growing fast across Asia. OSS is designed to keep business data safe and reliable while staying easy to use. It is also affordable for all types of companies.

OSS can store small or very large amounts of data and grows as your business grows. Many companies in Asia use OSS because it is fast, safe and helps them follow local rules. This is very important for companies that must follow strict data laws.

Key Features

Works Like Amazon S3

OSS offers an S3-compatible API. Applications built for Amazon S3 can usually move to OSS with few code changes, typically just the endpoint and credentials, so there is no need to rewrite them or change how they work.

This makes it easy for developers and businesses to switch to OSS without stopping work or spending extra time.
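In practice, moving an S3 application usually means pointing the existing S3 client at a different endpoint. The sketch below shows that idea with Python and the `boto3` S3 client. The provider registry, the helper names, the OSS endpoint (`oss-cn-hangzhou.aliyuncs.com`) and the virtual-hosted addressing setting are assumptions for illustration; check Alibaba Cloud's S3-compatibility documentation for the exact endpoint, region and settings your account needs.

```python
from typing import Dict, Optional

# Hypothetical provider registry: only the endpoint differs between providers.
# None means "use boto3's default AWS endpoint".
ENDPOINTS: Dict[str, Optional[str]] = {
    "aws": None,
    # Assumption: example OSS region endpoint; verify against Alibaba Cloud docs.
    "alibaba-oss": "https://oss-cn-hangzhou.aliyuncs.com",
}


def s3_client_kwargs(provider: str, access_key: str, secret_key: str,
                     region: str) -> dict:
    """Build keyword arguments for boto3.client("s3", ...)."""
    if provider not in ENDPOINTS:
        raise KeyError(f"unknown provider: {provider}")
    kwargs = {
        "region_name": region,
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }
    endpoint = ENDPOINTS[provider]
    if endpoint is not None:
        kwargs["endpoint_url"] = endpoint
    return kwargs


def make_s3_client(provider: str, access_key: str, secret_key: str, region: str):
    """Create an S3 client; the rest of the app's S3 calls stay the same."""
    import boto3  # requires `pip install boto3`
    from botocore.config import Config

    # Assumption: OSS expects virtual-hosted-style bucket URLs.
    config = Config(s3={"addressing_style": "virtual"})
    return boto3.client(
        "s3", config=config,
        **s3_client_kwargs(provider, access_key, secret_key, region),
    )
```

The design keeps the provider choice in one place: calls such as `list_objects_v2` or `upload_file` elsewhere in the application should not need to change, as long as they use features the target service supports.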

Strong Presence in Asia Pacific

Alibaba Cloud has many data centers in China and across Asia Pacific. This gives businesses:

- Faster access because data is stored close to users.

- Lower delays so apps and websites work quickly.

- Compliance with local rules so companies meet regulations.

For companies with customers in Asia, OSS can be faster than global cloud providers whose nearest data centers may be far away.

Different Storage Types

OSS has different storage options depending on how often data is used:

- Hot Storage – For files used every day or needed quickly, like daily reports or active files.

- Cold Storage – For files used occasionally at a lower cost, like monthly backups.

- Archive Storage – For old files that are rarely used but kept for compliance, like historical logs.

This helps companies save money and still keep data safe and ready when needed.
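The rule of thumb above can be sketched as a small function that suggests a tier from how long a file has gone unused. The 30-day and 180-day thresholds and the function name are hypothetical; real choices depend on each tier's pricing, minimum storage period and retrieval time.

```python
# Hypothetical thresholds for illustration only.
HOT_MAX_IDLE_DAYS = 30
COLD_MAX_IDLE_DAYS = 180


def choose_tier(days_since_last_access: int) -> str:
    """Suggest Hot, Cold or Archive storage from how long a file sat unused."""
    if days_since_last_access < 0:
        raise ValueError("days_since_last_access cannot be negative")
    if days_since_last_access <= HOT_MAX_IDLE_DAYS:
        return "Hot"      # used often, e.g. daily reports
    if days_since_last_access <= COLD_MAX_IDLE_DAYS:
        return "Cold"     # used sometimes, e.g. monthly backups
    return "Archive"      # kept mainly for compliance, e.g. historical logs


if __name__ == "__main__":
    files = {"daily-report.csv": 1, "backup-2025-11.tar": 60, "logs-2019.gz": 2000}
    for name, idle_days in files.items():
        print(name, choose_tier(idle_days))
```

In production, this kind of rule is usually configured as a lifecycle policy on the bucket rather than written into application code.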

Safe and Reliable

OSS keeps multiple copies of data in different locations, so your data is not lost even if a server fails. Security features such as encryption and access control protect private data like financial records, customer information and important business files.

Companies can trust OSS to protect important data and still let apps and users access it quickly and reliably.

Best For

Alibaba Cloud OSS is great for companies in China or Asia Pacific especially those that:

- Need cloud storage that is safe and follows local rules.

- Want a cheaper alternative to Amazon S3.

- Need different storage types to save money and get fast access.

- Use S3-based apps and want a platform that is easy to move them to.

OSS works well for startups, medium businesses and large companies because it grows as the business grows and keeps storage simple.

Why Alibaba Cloud OSS is Good

- Works with S3 apps so moving data is easy.

- Strong presence in Asia with local compliance.

- Hot, cold and archive storage tiers help save money.

- Safe, reliable and scalable for all business sizes.

In Short

Alibaba Cloud OSS is simple, fast, safe and affordable cloud storage. It is made for companies in Asia that want reliable storage that follows local rules.

OSS is one of the best alternatives to Amazon S3 for companies that need safe storage, fast performance and easy integration with apps. It is suitable for startups, medium businesses and large companies who want simple cloud storage that grows with them.

10. Wasabi Hot Cloud Storage – Simple Version

Wasabi Hot Cloud Storage is an online object storage service for keeping your data safe. It is fast and often costs less than large clouds like Amazon S3. It is good for startups, small businesses and medium companies that want safe storage without paying too much. Wasabi is simple, fast and reliable, so businesses can store lots of data easily.

Key Features

Predictable Pricing

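With Wasabi you know roughly how much you will pay every month. Under its fair-use policy, Wasabi does not charge extra fees for egress (downloading your data) or API requests, so the bill mostly depends on how much data you store. This makes it easy for small businesses to plan their budget.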
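A quick way to see why this helps with budgeting is to compare a storage-only bill with one that also charges for downloads. The sketch below is simple arithmetic; the function name and every price in it are made-up placeholders, not Wasabi's or any other provider's real rates.

```python
def monthly_cost(stored_tb: float, egress_tb: float,
                 storage_price_per_tb: float,
                 egress_price_per_tb: float = 0.0) -> float:
    """Estimate a monthly bill as storage charges plus egress (download) charges."""
    if min(stored_tb, egress_tb, storage_price_per_tb, egress_price_per_tb) < 0:
        raise ValueError("inputs must be non-negative")
    return stored_tb * storage_price_per_tb + egress_tb * egress_price_per_tb


if __name__ == "__main__":
    # Placeholder prices for illustration only, not real provider rates.
    for egress in (0, 20, 40):
        flat = monthly_cost(50, egress, storage_price_per_tb=7.0)
        metered = monthly_cost(50, egress, storage_price_per_tb=5.0,
                               egress_price_per_tb=90.0)
        print(f"egress {egress} TB: flat {flat:.2f}, metered {metered:.2f}")
```

With storage-only pricing the bill stays the same no matter how much data is downloaded, while the metered bill rises with every terabyte of egress, which is what makes it harder to predict.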

High Durability

Wasabi keeps your data very safe, and data loss is extremely unlikely. Your data is stored redundantly across multiple servers, so even if one server breaks, it is still protected.

Works with S3 Applications

Wasabi uses the same API as Amazon S3. A business that already uses Amazon S3 can usually move to Wasabi by changing only the endpoint and credentials its applications use. This makes switching easy and smooth.

Low Cost

Wasabi is cheaper than most other big cloud providers. This is very helpful for small businesses or startups that need lots of storage but have limited budgets.

Best For

Wasabi is best for startups, small businesses and medium companies that want safe and fast storage but do not want to spend too much. It helps businesses focus on growing instead of worrying about high cloud costs.

Why Wasabi is Good

- Simple: Easy to set up and use, with no hidden costs.

- Fast: Data can be accessed quickly.

- Safe: Very reliable so data is protected.

- Flexible: Works with Amazon S3 applications so moving data is easy.

In short, Wasabi Hot Cloud Storage is cheap, fast and safe. It suits businesses that want cloud storage without spending too much or dealing with complicated rules. It is a good alternative to Amazon S3 for companies that care about cost, safety and ease of use.

Final Thoughts – Easy Version

Amazon S3 is very popular and many businesses around the world use it. It is reliable, can grow with your business and has many features. But today businesses need more than just reliability. They also care about keeping data in their own country, following the law, saving money, getting fast performance and having specialized features.

The cloud options we talked about earlier give businesses more choices so they can pick what works best for them.

Clouds with Global Reach and Smart Features

Google Cloud Storage and Microsoft Azure Blob Storage are good for businesses that need access from many countries. They also offer AI and machine learning tools that help companies analyze data, work faster and make better decisions. These clouds are great for companies that operate worldwide and need strong tools to handle large amounts of data.

Clouds that Save Money

Wasabi Hot Cloud Storage and Backblaze B2 are cheaper options. They keep data safe and costs are easy to predict. They do not have hidden fees or expensive charges like some big clouds. These are perfect for small businesses, media companies or developers who want safe storage without spending too much.

Sovereign and Local Clouds

Some businesses need to keep data in their own country because of laws and privacy. Utho Cloud is 100 percent Indian-owned and keeps all data in India. This is very important for startups, big companies and government organizations that must follow India's DPDP Act or want full control of sensitive information. Utho also gives good performance, lower costs and local support. This makes it the best choice for those who want data to stay in India and follow the rules.

Choosing the Right Cloud

Today businesses should not rely on just one cloud. They need to pick the right mix of clouds for their needs, considering:

- Following rules and keeping data legal.

- Fast access and low delay for users.

- Saving money while getting good quality.

- Good support and reliable uptime.

- Growing with the business needs.

By weighing these factors, businesses can build a plan that uses more than one cloud. This gives more control, lowers risks and helps the company grow safely.
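One simple way to weigh these factors is a weighted scoring matrix. The sketch below is a hypothetical example: the criterion names mirror the list above, but the weights, the 0 to 10 scores and the provider names are placeholders you would replace with your own assessments, not ratings of any real provider.

```python
from typing import Dict, List, Tuple

# Hypothetical weights (summing to 1.0) for the criteria listed above.
WEIGHTS: Dict[str, float] = {
    "compliance": 0.30,
    "latency": 0.20,
    "cost": 0.25,
    "support_uptime": 0.15,
    "scalability": 0.10,
}


def weighted_score(scores: Dict[str, float],
                   weights: Dict[str, float] = WEIGHTS) -> float:
    """Combine 0-10 scores per criterion into one weighted total."""
    missing = set(weights) - set(scores)
    if missing:
        raise KeyError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


def rank_providers(candidates: Dict[str, Dict[str, float]]) -> List[Tuple[str, float]]:
    """Return (provider, score) pairs from best to worst."""
    ranked = [(name, weighted_score(s)) for name, s in candidates.items()]
    return sorted(ranked, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    # Placeholder scores for two hypothetical providers.
    candidates = {
        "Provider A": {"compliance": 9, "latency": 8, "cost": 6,
                       "support_uptime": 8, "scalability": 7},
        "Provider B": {"compliance": 6, "latency": 7, "cost": 9,
                       "support_uptime": 7, "scalability": 8},
    }
    for name, score in rank_providers(candidates):
        print(f"{name}: {score:.2f}")
```

Changing the weights is how a business encodes its priorities: a regulated company might raise the weight on compliance, while a cost-sensitive startup might raise the weight on cost.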

In short, Amazon S3 is strong and useful, but businesses today benefit from looking at other cloud options. The right cloud depends on your goals, budget, legal requirements and plans for growth. Some clouds are good for global reach, some save money and some, like Utho, keep data local and safe. Choosing the right one helps your business work better now and in the future.